Aims, objectives and final outputs of the project

The Open Metadata Pathway or Pathfinder project will deliver a robustly validated demonstrator of the effectiveness of opening up archival catalogues to widened automated linking and discovery by embedding RDFa metadata in Archives in the M25 area (AIM25) collection-level catalogue descriptions. It will also implement, as part of the AIM25 system, the automated publishing of the system's high-quality authority metadata as open datasets. The project will include an assessment of the effectiveness of automated semantic data extraction through natural language processing tools (using GATE) and measure the effectiveness of the approach through statistical analysis and review by key stakeholders (users and archivists). All outputs of the project will be integrated into AIM25 resources and workflows, ensuring the sustainability of the benefits to the community.

Summary objectives

Standards-based cataloguing with thesaurus support is both time consuming and constrained by subjective and contemporary views about subject choice and relevance. The use of automated semantic metadata extraction through natural language processing tools, combined with Linked Data, offers the possibility of upgraded harvesting and wider and more effective subject searching.

The project will deliver a robustly validated pilot embedding RDFa metadata in AIM25 archival collection catalogues, opening up archival catalogues to widened automated linking and discovery. This will include creation of metadata profiles and URI schemes and an assessment of the effectiveness of automated semantic metadata extraction through natural language processing tools (using GATE). The outputs of the project will be integrated into the AIM25 resources and workflows, ensuring that AIM25 content continues to be available in linked data form.

A large amount of accumulated authority metadata (subject terms, personal and place names, geographical names) exists in AIM25 SQL database tables and is already normalised in appropriate standard forms (e.g. NCA Rules). This is used to provide search and access points to the collection records. The project will reimplement these rich metadata resources as embedded RDFa within the online catalogues, and ensure the resulting datasets are openly available for reuse under appropriate open licensing tools (e.g. ODL, GPL, Creative Commons), in consultation with the community and the Programme Manager.
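
As a rough illustration of what embedding an authority term as RDFa could look like, the sketch below wraps one term in RDFa 1.1 Lite markup. The `example.org` URI, the choice of `skos:Concept` and `skos:prefLabel`, and the function name `rdfa_term` are assumptions made for the example; the actual AIM25 profile and URI scheme are project outputs still to be defined.

```python
from html import escape

SKOS = "http://www.w3.org/2004/02/skos/core#"


def rdfa_term(term_uri: str, label: str) -> str:
    """Return an HTML fragment describing one authority term in RDFa 1.1 Lite.

    The outer span names and types the resource; the inner span carries the
    label as a literal property of that resource.
    """
    uri = escape(term_uri, quote=True)
    return (
        f'<span vocab="{SKOS}" resource="{uri}" typeof="Concept">'
        f'<span property="prefLabel">{escape(label)}</span>'
        "</span>"
    )


# Hypothetical term URI in an invented namespace, for illustration only.
example = rdfa_term("http://example.org/aim25/subject/navigation", "Navigation")
print(example)
```

The label is kept on a nested element because, in RDFa 1.1, placing `property` on the same element as `typeof` and `resource` would make the resource itself the property value rather than the text.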

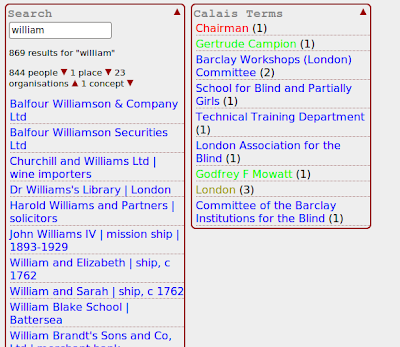

For the benefit of the data creators, workflow and input systems will be revised to support new metadata creation techniques, including authority-based techniques. For the benefit of the end user, these key terms in the catalogues will be implemented as clickable hotspots, offering context-specific linking and searching to other systems. Existing features and functionality will not be compromised.

The pilot system will be used to demonstrate and evaluate the effectiveness of reimplementing existing search tools and entry points within the system using SPARQL, as well as creating an API enabling external services to use the same retrieval tools.
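
To make the idea of a SPARQL-based search tool more concrete, here is a minimal sketch of how a subject search might be expressed as a query string. The use of `dcterms:subject`, `dcterms:title` and `skos:prefLabel` is an assumption for illustration, since the project's data profile is not yet defined, and `subject_search_query` is a hypothetical helper rather than part of any existing AIM25 code.

```python
SKOS_NS = "http://www.w3.org/2004/02/skos/core#"
DCT_NS = "http://purl.org/dc/terms/"


def escape_literal(value: str) -> str:
    """Escape backslashes, double quotes and newlines for a SPARQL string literal."""
    return (
        value.replace("\\", "\\\\")
        .replace('"', '\\"')
        .replace("\n", "\\n")
    )


def subject_search_query(label: str, limit: int = 20) -> str:
    """Build a SELECT query for collections indexed under a subject label."""
    return (
        f"PREFIX skos: <{SKOS_NS}>\n"
        f"PREFIX dcterms: <{DCT_NS}>\n"
        "SELECT ?collection ?title WHERE {\n"
        "  ?collection dcterms:subject ?concept ;\n"
        "              dcterms:title ?title .\n"
        f'  ?concept skos:prefLabel "{escape_literal(label)}"@en .\n'
        "}\n"
        f"LIMIT {int(limit)}"
    )


query = subject_search_query("Navigation", limit=5)
print(query)
```

The same query text could be sent by the internal search pages and by external clients of the API, which is the sense in which both would share one set of retrieval tools.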

Dual input will be undertaken of over 1,140 entries from CALM and AdLib from six partner institutions, including editorial confirmation of ISAD(G) compliance and creation of UKAT and NCA Name Authority files.

Results and outputs will be evaluated at key milestones by a representative panel of archivists from AIM25 members and users to assess their usability and accessibility.

Outputs

· A working model of an enhanced AIM25 web application, for demonstration and evaluation purposes, to include SPARQL APIs; reimplementation of existing end-user tools for searches, views and queries using RDF query tools and AJAX.

· AIM25 authority metadata in linked data format, published with an Open Metadata licence, including SKOS implementation of the AIM25 thesaurus data;

· An AIM25 data profile based on the public schemas and ontologies identified for each domain (e.g. DBpedia) and a URI scheme for entities in the AIM25 namespace;

· Reimplementation of existing AIM25 data creation tools to include RDFa creation, assisted by natural language processing of catalogues via the GATE service;

· A published report detailing ongoing and summative evaluation of the techniques used and final outputs;

· Dissemination activities for the AIM25 partnership and the wider archives, access and discovery communities;

· Optimised user searching of AIM25.
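
As an illustration of the SKOS output listed above, the following sketch serialises a single thesaurus term as Turtle. The term, its alternative label, its broader term and the `example.org` URIs are invented for the example and are not taken from the AIM25 thesaurus; `concept_to_turtle` is a hypothetical helper.

```python
SKOS_PREFIX = "@prefix skos: <http://www.w3.org/2004/02/skos/core#> ."


def turtle_literal(value: str, lang: str = "en") -> str:
    """Return a language-tagged Turtle string literal with basic escaping."""
    escaped = value.replace("\\", "\\\\").replace('"', '\\"')
    return f'"{escaped}"@{lang}'


def concept_to_turtle(uri, pref_label, alt_labels=(), broader=()):
    """Serialise one thesaurus term as a skos:Concept in Turtle."""
    statements = ["a skos:Concept", f"skos:prefLabel {turtle_literal(pref_label)}"]
    statements += [f"skos:altLabel {turtle_literal(a)}" for a in alt_labels]
    statements += [f"skos:broader <{b}>" for b in broader]
    return f"<{uri}>\n    " + " ;\n    ".join(statements) + " ."


# Invented example term; labels and URIs are illustrative only.
example = concept_to_turtle(
    "http://example.org/aim25/subject/navigation",
    "Navigation",
    alt_labels=["Pilotage"],
    broader=["http://example.org/aim25/subject/transport"],
)
print(SKOS_PREFIX)
print(example)
```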

Wider Benefits to Sector & Achievements for Host Institution

Among the contributions the project will make to the sector and host institution are:

· Make open metadata about archives held in libraries, museums and archive repositories available through the delivery of an open, running pilot system demonstrating an enhanced version of the AIM25 system featuring embedded RDFa, a SPARQL-based query engine and SPARQL endpoint API. The records in the pilot system will number 1,140 (increasing to 16,140 when the project outputs are implemented for the live AIM25 system).

· Make the rich, validated and reviewed authority datasets of AIM25 available in an open format, under open licensing terms, for reuse by the archival and wider community. These include tens of thousands of entries including thesaurus terms (UKAT based, with local and MeSH additions), and personal, place and corporate names structured to NCA rules.

· Deliver a detailed account of the process and outcomes of creating and implementing linked data profiles for ISAD(G)/EAD based archival metadata and offer a clear articulation of how established descriptions and authority metadata standards may be delivered and maintained as open metadata.

· Provide a coherent analysis and examples for the archival and wider access and discovery community of the value, effectiveness and potential of the approach to delivery using RDF, in terms of widening access and deepening use, and provide an opportunity to learn how the approach optimises the use of archival staff time.

· Produce knowledge and practice, including a working model, that enhances and optimises AIM25 and may be of benefit to other institutions holding archives.

· Deliver optimised user searching tools and techniques for use in the AIM25 system that AIM25 will commit to implementing in its live system as soon as possible after the completion of the project. (A full, live launch across AIM25 has been excluded from the project scope owing to the limited timescale available.)

Risk analysis

Risk | Probability | Severity | Score | Actions to prevent / manage risk |

Difficulty in recruiting and retaining staff | 1 | 3 | 3 | Most staff are already employed by partners and their time will be bought out. The project will also distribute knowledge throughout the project to limit the effects if a staff member leaves. Given the short duration of the project, gaps will be filled by the use of agency staff or internal secondments. |

New partners are unable to supply numbers of descriptions | 2 | 2 | 4 | Utilise new accession material from existing partners. Fall back on existing data. |

A complete testbed and evaluation cannot be implemented within the time frame | 2 | 2 | 4 | Project management team will closely monitor progress of objectives and outputs. If necessary, with the agreement of the Programme Manager, some activities can be re-scoped to ensure effective outputs are achieved. |

Failure to meet project milestones | 2 | 3 | 6 | Produce project plan with clear objectives. Continuous project assessment and close communication between project manager, technical leads, and JISC programme manager to ensure targets are realistic, achievable and focused on project goals. |
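
The Score column appears to be the product of the Probability and Severity ratings; this holds for all four rows. The short sketch below recomputes the scores on that assumption and ranks the risks, using the values from the table.

```python
# (risk, probability, severity) as given in the risk table.
risks = [
    ("Difficulty in recruiting and retaining staff", 1, 3),
    ("New partners are unable to supply numbers of descriptions", 2, 2),
    ("A complete testbed and evaluation cannot be implemented within the time frame", 2, 2),
    ("Failure to meet project milestones", 2, 3),
]


def risk_score(probability: int, severity: int) -> int:
    """Score a risk as probability multiplied by severity (assumed scoring rule)."""
    return probability * severity


scores = [risk_score(p, s) for _, p, s in risks]

# Rank risks from highest to lowest score.
ranked = sorted(zip(scores, (name for name, _, _ in risks)), reverse=True)
for score, name in ranked:
    print(score, name)
```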

IPR

IPR in all reports and other documents produced by the project will be retained jointly by King’s College London and ULCC but made freely available under a non-exclusive licence as required/advised by JISC. All software and data created during the project will be made available to the community under an open licence. We will respect the licence models of all third-party software used during the project, most of which is made available under open source licences.

Project team relationships and end user engagement

The project will be overseen by a board comprising: Patricia Methven, Director of AIM25 (Chair); Kevin Ashley, Director, Digital Curation Centre; Mark Hedges, Deputy Director, CeRch; Geoffrey Browell, Senior Archivist, King’s College Archives Services; Richard Davis, ULCC Digital Archives; and five nominated members of AIM25 reflecting new and existing partners. Input from other leading figures from JISC digital archives projects will be invited. The project will be managed by Geoff Browell with specialist and technical support from Richard Davis and Gareth Knight. Project staff will be ex officio members.

End-user Engagement

The project will establish a project blog to record progress and invite comment. The project team will work proactively with other RDTF activities and projects, including LOCAH and CHALICE, to identify synergistic goals and approaches. We will also work with the Open/Linked Data and Semantic Web communities to maximise dissemination opportunities for outputs, and to develop the new AIM25 API. Services such as LinkedData.org and PTWS.com will be used to publicise the availability of the data. Project outputs will be made available on the project website. Dissemination to the wider archival, museum and library communities will be offered through the professional conferences and press of ARA, CILIP, RLUK, SCONUL and the Museums Association. Websites such as Culture24 and that of the Museums, Libraries and Archives Council will also be notified. A regional dissemination event will be hosted by the AIM25 partnership in addition to hosted JISC events.

Timeline, workplan and methodology

Work package 1: Project management

This covers management activity throughout the project. It will assemble the project team; prepare the detailed project plan; establish the steering group; and agree the configuration of the project testbed. Cross-institutional, cross-partnership involvement will require close liaison between all partners, including existing AIM25 partners. There will be monthly meetings, ad hoc communication, and at least four focus groups (two from each of the user and archival communities) to undertake the evaluation. Deliverables: Detailed project plan; progress and risk assessment reports; project and focus group meetings; exit and sustainability plan; ongoing coordination; liaison with JISC programme manager. Led by King’s College London Archives.

Work package 2: Testbed record selection and creation

Import into the existing AIM25 system of 1,140 new ISAD(G)-compliant collection (fonds) descriptions directly from proprietary software (CALM or AdLib as appropriate) through an established automated ingest protocol developed in association with the Archives Hub. The entries will cover the full archival holdings of the National Maritime Museum (700 collections), and the most significant records of the Zoological Society of Great Britain (100) and the British Postal Museum (100). Those for the London Boroughs of Hammersmith and Fulham (100 collections representing 8% of collection level descriptions) and Wandsworth (100 collections representing 80% of their collection level descriptions) are de facto the collections regarded by custodians as being of significant wider interest and those which have been prioritised for cataloguing (the Borough percentages do not reflect physical extent). An additional 40 new descriptions will be added by King’s College London representing accessions for 2009/10, 2.5% of the full total for King’s already available on AIM25. Name authority and subject terms will be added for these entries in the normal way by experienced externally contracted staff. Collections are defined according to their provenance and range from one to a thousand boxes. Deliverables: Creation and configuration of collection descriptions for testbed content. Led by King’s College London Archives.
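
As a quick cross-check of the figures above, the per-institution counts can be summed to confirm the testbed total of 1,140 descriptions from six partners. The dictionary keys simply restate the institutions named in this work package.

```python
# Collection-level descriptions per contributing institution, as stated above.
testbed = {
    "National Maritime Museum": 700,
    "Zoological Society of Great Britain": 100,
    "British Postal Museum": 100,
    "London Borough of Hammersmith and Fulham": 100,
    "London Borough of Wandsworth": 100,
    "King's College London": 40,
}

total_descriptions = sum(testbed.values())
partner_count = len(testbed)

print(f"{partner_count} partners, {total_descriptions} descriptions")
```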

Work package 3: Metadata profiling and processing

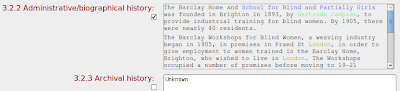

Analysis of testbed materials to define metadata requirements. This will include a review of relevant and recent outputs in the field, such as LOCAH and CHALICE. To draw out the rich seams of information in the narrative texts of the ISAD(G) descriptions (including personal and corporate names, place names and dates), the project will use GATE (General Architecture for Text Engineering), a Java-based natural language processor developed by the University of Sheffield, to parse unstructured content and identify key entities. The outputs of GATE processing will be evaluated in conjunction with existing authority records in AIM25. A URI scheme will be defined to enable the resulting metadata to be published as open data. Entities will be tagged and identified with a URI and marked-up text will be exported to EAD. Deliverables: Creation of an RDF enriched corpus; creation of a URI scheme utilising GATE outputs and existing authority records within AIM25; creation of style sheets to transform GATE outputs to EAD; definition of requirements for RDF triple store. Led by King’s College London CeRch.
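
One way to picture how extracted entities might be combined with existing authority records under a URI scheme is sketched below. GATE itself is not called: its output is stood in for by plain `(type, label)` pairs, and the `example.org` base URI, the path layout, the sample records and the helper names are all assumptions made for illustration.

```python
import re
import unicodedata

BASE = "http://example.org/aim25/"  # hypothetical namespace, not the real scheme


def slugify(label: str) -> str:
    """Reduce a label to a lowercase ASCII slug suitable for a URI path segment."""
    text = unicodedata.normalize("NFKD", label).encode("ascii", "ignore").decode("ascii")
    return re.sub(r"[^a-z0-9]+", "-", text.lower()).strip("-")


def mint_uri(entity_type: str, label: str) -> str:
    """Mint a new URI for an entity that has no authority record."""
    return f"{BASE}{entity_type}/{slugify(label)}"


def reconcile(extracted, authority):
    """Map each extracted (type, label) pair to an authority URI, or mint one."""
    index = {(t, slugify(l)): uri for (t, l), uri in authority.items()}
    return {
        (t, l): index.get((t, slugify(l))) or mint_uri(t, l)
        for t, l in extracted
    }


# Invented sample data standing in for authority records and extracted entities.
authority = {("person", "Nelson, Horatio"): BASE + "person/1234"}
extracted = [("person", "NELSON, Horatio"), ("place", "Greenwich")]

result = reconcile(extracted, authority)
for key, uri in result.items():
    print(key, "->", uri)
```

Matching on a normalised slug is deliberately crude; it tolerates differences in case and punctuation but would not resolve variant forms of a name, which is where the evaluation against existing authority records would matter.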

Work package 4: Implementation

Implementation of WP3 recommendations within a copy of the current AIM25 system. This will include an RDF triple store, re-implementation of existing search tools and entry points within the system using SPARQL, and creation of an API enabling external services to use the same retrieval tools. Implementation of a tool to support highlighting of key terms in cataloguing as clickable hotspots, offering content-specific linking and searching to other systems with Web APIs likely to be of use to researchers/end-users. Convert AIM25 authority records to RDF and publish as open metadata. Define and implement enhanced AIM25 browsing interface, data entry interface and APIs. Deliverables: Working model of RDFa-enhanced AIM25 system (including end-user and data-entry enhancements); tools to create and publish open metadata; published open metadata and exemplar for evaluation. Led by ULCC.
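
The clickable hotspots described above could, in the simplest case, be produced by wrapping known key terms in links when a description is rendered. The sketch below shows that idea only; the `hotspot` class name, the target URLs and the `add_hotspots` helper are hypothetical, and matching is exact and case-sensitive.

```python
import re
from html import escape


def add_hotspots(text: str, term_links: dict) -> str:
    """Return HTML in which each known key term is wrapped in a hyperlink."""
    if not term_links:
        return escape(text)
    # Try longer terms first so a longer term wins over a term it begins with.
    terms = sorted(term_links, key=len, reverse=True)
    pattern = re.compile("|".join(re.escape(t) for t in terms))
    parts, position = [], 0
    for match in pattern.finditer(text):
        parts.append(escape(text[position:match.start()]))
        url = escape(term_links[match.group(0)], quote=True)
        parts.append(f'<a class="hotspot" href="{url}">{escape(match.group(0))}</a>')
        position = match.end()
    parts.append(escape(text[position:]))
    return "".join(parts)


# Invented terms and target URLs, for illustration only.
links = {
    "Royal Navy": "http://example.org/aim25/subject/royal-navy",
    "Navy": "http://example.org/aim25/subject/navy",
}
html_out = add_hotspots("Papers of the Royal Navy & Greenwich", links)
print(html_out)
```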

Work package 5: Evaluation

Evaluation of outputs of WP3 and WP4 using statistical assessment, web analytics and structured survey techniques. Four focus groups will be conducted, two with archivists from new and existing AIM25 partners and two drawn from academic users from a variety of disciplines, to compare existing AIM25 and open metadata AIM25 searches. Deliverables: Definition of evaluation approach; statistical user and community evaluation of approach to open metadata, GATE processing and enhancements. Led by King’s College London Archives Services.
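
The statistical measures to be used are not specified here. Purely as one possible example, automatically extracted terms could be compared with the terms assigned by indexers using precision and recall. The sketch below assumes that approach and uses invented term sets; it is not a statement of the project's evaluation method.

```python
def precision_recall(extracted, reference):
    """Compare extracted terms with a reference set of manually assigned terms.

    Precision: share of extracted terms that are also in the reference set.
    Recall: share of reference terms that were extracted.
    """
    extracted, reference = set(extracted), set(reference)
    matched = len(extracted & reference)
    precision = matched / len(extracted) if extracted else 0.0
    recall = matched / len(reference) if reference else 0.0
    return precision, recall


# Invented term sets for a single description, for illustration only.
machine_terms = {"Nelson, Horatio", "Greenwich", "Admiralty", "London"}
indexer_terms = {"Nelson, Horatio", "Greenwich", "Royal Navy"}

precision, recall = precision_recall(machine_terms, indexer_terms)
print(f"precision={precision:.2f} recall={recall:.2f}")
```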

Work package 6: Dissemination

The project will establish a project blog to record progress and invite comment. The project team will work proactively with other RDTF activities and projects, including LOCAH and CHALICE, to identify synergistic goals and approaches. We will also work with the Open/Linked Data and Semantic Web communities to maximise dissemination opportunities for outputs, and to develop the new AIM25 API. Services such as LinkedData.org and PTWS.com will be used to publicise the availability of the data. Project outputs will be made available on the project website. Dissemination to the wider archival, museum and library communities will be offered through the professional conferences and press of ARA, CILIP, RLUK, SCONUL and the Museums Association. Websites such as Culture24 and that of the Museums, Libraries and Archives Council will also be notified. A regional dissemination event will be hosted by the AIM25 partnership in addition to hosted JISC events.

2011 | Feb | Mar | Apr | May | Jun | July |

WP1 | X | X | X | X | X | X |

WP2 | X | X | X | | | |

WP3 | X | X | X | | | |

WP4 | | | X | X | X | X |

WP5 | | | | | X | X |

WP6 | X | | | X | | X |

Budget

Directly Incurred Staff | August 10 – July 11 | August 11 – July 12 | TOTAL £ |

Grade 6, 10 days, 9% FTE | £2,340.80 | £ | £2,340.80 |

Grade 6, 27 days, 24.5% FTE | £5,526.90 | £ | £5,526.90 |

Grade 8, point 46, 8 days, 7% FTE | £2,616.00 | £ | £2,616.00 |

Grade 7, point 43, 29 days, 26% FTE | £9,483.00 | £ | £9,483.00 |

Indexer A, Grade 2, 6 months, 35% FTE | £3,545.30 | £ | £3,545.30 |

Indexer B, Grade 2, 6 months, 35% FTE | £3,545.30 | £ | £3,545.30 |

External Contractor | £2,679.42 | £ | £2,679.42 |

Total Directly Incurred Staff (A) | £29,736.72 | £ | £29,736.72 |

Non-Staff | August 10 – July 11 | August 11 – July 12 | TOTAL £ |

Travel and expenses | £800.00 | £ | £800.00 |

Hardware/software | £1,000.00 | £ | £1,000.00 |

Dissemination | £800.00 | £ | £800.00 |

Evaluation | £400.00 | £ | £400.00 |

Other | £1,000.00 | £ | £1,000.00 |

Total Directly Incurred Non-Staff (B) | £4,000.00 | £ | £4,000.00 |

Directly Incurred Total (C) (A+B=C) | £33,736.72 | £ | £33,736.72 |

Directly Allocated | August 10 – July 11 | August 11 – July 12 | TOTAL £ |

Staff Grade 7, point 38, 6 months, 20% FTE | £5,317.33 | £ | £5,317.33 |

Estates | £4,956.00 | £ | £4,956.00 |

Other | £ | £ | £ |

Directly Allocated Total (D) | £10,273.33 | £ | £10,273.33 |

Indirect Costs (E) | £30,734.68 | £ | £30,734.68 |

Total Project Cost (C+D+E) | £74,744.73 | £ | £74,744.73 |

Amount Requested from JISC | £40,000.00 | £ | £40,000.00 |

Institutional Contributions | £34,744.73 | £ | £34,744.73 |

Percentage Contributions over the life of the project | JISC 54% | Partners 46% | Total 100% |
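
The budget totals can be re-derived from the line items as a consistency check. The sketch below uses the figures from the tables above and exact decimal arithmetic; the variable names are chosen for the example.

```python
from decimal import Decimal as D

# Line items taken from the budget tables above.
staff = [D("2340.80"), D("5526.90"), D("2616.00"), D("9483.00"),
         D("3545.30"), D("3545.30"), D("2679.42")]
non_staff = [D("800"), D("1000"), D("800"), D("400"), D("1000")]
directly_allocated = [D("5317.33"), D("4956.00")]
indirect = D("30734.68")        # (E)
jisc_request = D("40000")

total_a = sum(staff)                       # (A) directly incurred staff
total_b = sum(non_staff)                   # (B) directly incurred non-staff
total_c = total_a + total_b                # (C) = A + B
total_d = sum(directly_allocated)          # (D) directly allocated
project_total = total_c + total_d + indirect
institutional = project_total - jisc_request

# Percentage shares, rounded to whole numbers as in the final table row.
jisc_share = round(jisc_request / project_total * 100)
partner_share = 100 - jisc_share

print(total_a, total_c, total_d, project_total, institutional)
print(f"JISC {jisc_share}% / Partners {partner_share}%")
```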